Summary

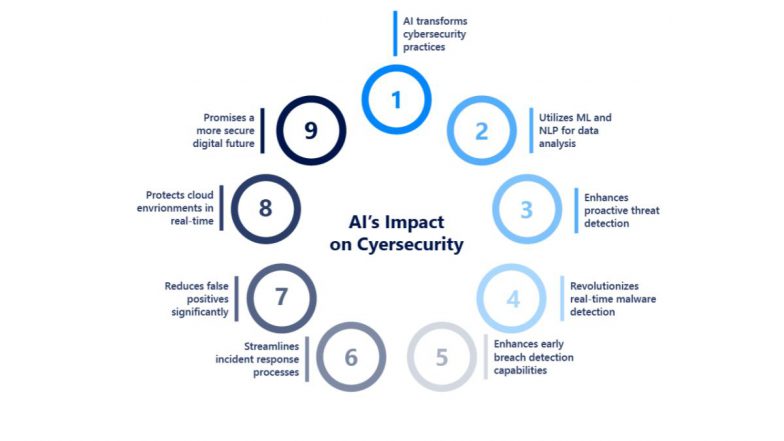

AI’s impact on cybersecurity is significant, offering powerful defensive tools like enhanced threat detection, faster incident response, and predictive analytics, while also presenting new risks like sophisticated AI-powered attacks and challenges in model security.

For defense, AI analyzes vast datasets to identify anomalies and automate responses, such as isolating compromised systems. For offense, attackers use AI to create more convincing phishing attempts and adapt attacks in real-time, leading to an ongoing arms race.

Source: Gemini AI Overview – 10/24/2025

OnAir Post: Impact of AI on Cybersecurity

About

Benefits of AI

- Faster and more accurate detection: AI can analyze massive volumes of security data in real-time to identify anomalies and malicious activity that human analysts might miss. For example, AI can detect unusual login patterns, strange network traffic, or abnormal user behavior.

- Reduced false positives: AI models can be trained to distinguish between genuine threats and benign activities, significantly reducing the number of false alarms that security teams must investigate.

- Predictive analytics: By analyzing historical data and threat intelligence feeds, AI can identify emerging attack patterns and predict potential vulnerabilities before they are exploited.

- Automated incident response: AI-driven systems can automatically respond to threats, such as isolating infected devices or blocking malicious IP addresses, to keep attacks from spreading and to minimize damage. AI also automates repetitive tasks, allowing human analysts to focus on complex issues and potentially reducing costs.

- Behavioral and vulnerability analysis: AI can analyze user behavior to detect insider threats or compromised accounts, scan for vulnerabilities and prioritize remediation, and strengthen authentication through behavioral biometrics.
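
To make the “unusual login patterns” idea concrete, here is a minimal sketch that flags days whose failed-login count sits far outside an account’s historical baseline. It uses a plain z-score rather than a trained model, and the function name, threshold, and counts are all hypothetical; production tools typically learn richer baselines across many signals at once.

```python
from statistics import mean, stdev

def zscore_anomalies(history, new_values, threshold=3.0):
    """Return the new values whose z-score against the historical baseline exceeds threshold.

    history needs at least two points so a sample standard deviation exists.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Flat baseline: any deviation at all is treated as anomalous.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]

# Hypothetical daily failed-login counts for one account.
baseline = [2, 3, 1, 2, 4, 3, 2, 3, 2, 3]
today = [3, 41]
print(zscore_anomalies(baseline, today))  # [41]
```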

Source: Gemini AI Deep Dive Overview – 10/24/2025

Risks and challenges

- AI-powered attacks: Attackers use AI for sophisticated social engineering, such as personalized phishing and deepfakes. They also create evasive malware that adapts to slip past traditional defenses, and they automate attacks at scale.

- Vulnerabilities in AI systems: AI models are vulnerable to attacks such as data poisoning, in which attackers inject malicious samples into the training data so the model learns incorrect behavior, and adversarial attacks, which use carefully crafted inputs to make a model misclassify. Biased training data can also create security blind spots.

- Cost, complexity, and oversight: Implementing and maintaining AI security tools can be costly and complex, requiring specialized resources. Over-reliance on AI can also reduce human vigilance, leaving organizations exposed to novel threats. Ethical concerns around privacy and data collection, as well as the “black box” nature of some AI models, present further challenges.
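
Data poisoning can be illustrated with a toy nearest-centroid classifier over a single hypothetical “suspicion” feature. The sketch assumes the attacker can slip mislabeled samples into the training set; those samples drag the benign centroid toward malicious territory until a genuinely suspicious sample is classified as benign. All names and values are invented for illustration.

```python
from statistics import mean

def train_centroids(samples):
    """samples: list of (feature_value, label). Returns label -> mean feature value."""
    by_label = {}
    for x, y in samples:
        by_label.setdefault(y, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}

def predict(centroids, x):
    # Assign the label whose centroid is closest to x.
    return min(centroids, key=lambda label: abs(centroids[label] - x))

clean = [(0.1, "benign"), (0.2, "benign"), (0.3, "benign"),
         (0.8, "malicious"), (0.9, "malicious"), (1.0, "malicious")]
# Hypothetical poison: high-scoring samples falsely labeled benign.
poison = [(0.95, "benign")] * 6

sample = 0.7
print(predict(train_centroids(clean), sample))           # malicious
print(predict(train_centroids(clean + poison), sample))  # benign
```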

Research

AI has a dual role in cybersecurity: acting as a powerful defensive tool while also being exploited by adversaries for more sophisticated attacks. Top research projects focus on using AI to enhance defensive capabilities and developing countermeasures to malicious AI use.

1. Adversarial AI and defensive AI models

- Offensive research: Researchers explore techniques like “poisoning attacks,” where manipulated training data creates vulnerabilities, or “evasion attacks,” which fool a deployed model into misclassifying malicious input.

- Defensive research: Projects focus on “adversarial training,” which exposes models to malicious examples during development to make them more robust. This research is crucial for AI in critical systems, like autonomous vehicles and healthcare.
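
An evasion attack, and the intuition behind adversarial training, can be sketched on a hypothetical logistic-regression malware scorer. The example applies a fast-gradient-sign style step: for a linear model, the gradient of the score with respect to the input is just the weight vector, so nudging each feature against the sign of its weight lowers the malicious score. The weights, features, and epsilon are invented for illustration.

```python
import math

def score(weights, bias, x):
    """Logistic-regression probability that feature vector x is malicious."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 / (1 + math.exp(-z))

def _sign(v):
    return (v > 0) - (v < 0)

def fgsm_evasion(weights, x, epsilon):
    """Move each feature by epsilon against the sign of its weight.

    For logistic regression, d(z)/d(x) equals the weight vector, so this
    single step pushes the malicious score down as fast as possible under
    a per-feature budget of epsilon.
    """
    return [xi - epsilon * _sign(w) for w, xi in zip(weights, x)]

# Hypothetical trained model and a sample it flags as malicious.
w, b = [2.0, -1.0, 3.0], -1.0
x = [0.6, 0.2, 0.5]
x_adv = fgsm_evasion(w, x, epsilon=0.3)
print(round(score(w, b, x), 3), round(score(w, b, x_adv), 3))
```

With these numbers the score drops from about 0.82 to about 0.43, so the sample slips under a 0.5 decision threshold. Adversarial training would add samples like `x_adv`, still labeled malicious, back into the training set. Real malware features cannot always be changed freely, since the file must keep working, which is one reason attacks on deployed detectors are harder than this toy suggests.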

2. Automated malware detection and reverse engineering

- Real-world example: Microsoft’s “Project Ire” is a prototype AI agent designed to autonomously detect, classify, and reverse-engineer malware, freeing up human analysts for more complex threats.

- Advanced methods: Current research explores deep learning techniques, including Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), to analyze file behavior and system changes.
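
Before deep models, many detectors relied on hand-crafted features, and byte entropy is a classic one: packed or encrypted payloads tend toward the 8-bits-per-byte maximum. The sketch below computes Shannon entropy for a byte string. It is a simple stand-in for the CNN/RNN approaches above, not how Project Ire or any named product works. Legitimately compressed files are also high-entropy, so entropy is one signal to combine with others, not a verdict.

```python
import math
from collections import Counter

def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte, from 0.0 (one repeated byte) to 8.0 (uniform)."""
    if not data:
        return 0.0
    counts = Counter(data)  # iterating bytes yields ints 0-255
    n = len(data)
    # Sum of p * log2(1/p) over observed byte values.
    return sum((c / n) * math.log2(n / c) for c in counts.values())

print(shannon_entropy(b"AAAAAAAA"))        # 0.0
print(shannon_entropy(bytes(range(256))))  # 8.0
```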

3. Behavioral analytics for insider threat detection

- Anomalous activity detection: An AI system might flag an employee who suddenly begins accessing sensitive files late at night or logs in from an unusual location. This approach adapts to normal behavioral shifts and is less prone to the high false positives of static, rule-based systems.

- Insider risk prevention: The research helps organizations detect accidental data misuse, compromised accounts, or malicious insider actions that are often difficult to spot with traditional tools.
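
The late-night-access example can be sketched as a per-user hour-of-day profile. This assumes access logs have been reduced to the hour of each event; the `min_fraction` cutoff and the sample history are hypothetical. Rebuilding the profile periodically from recent history is one simple way such a baseline adapts to normal shifts in behavior.

```python
from collections import Counter

def build_hour_profile(access_hours):
    """Fraction of a user's historical file accesses that fell in each hour (0-23)."""
    counts = Counter(access_hours)
    total = len(access_hours)
    return {hour: counts[hour] / total for hour in range(24)}

def is_unusual(profile, hour, min_fraction=0.02):
    # Flag accesses at hours that made up less than min_fraction of past activity.
    return profile[hour] < min_fraction

# Hypothetical history: a user who mostly works 9:00-17:00, occasionally at 18:00.
history = [9, 10, 11, 13, 14, 15, 16] * 20 + [18] * 3
profile = build_hour_profile(history)
print(is_unusual(profile, 14), is_unusual(profile, 2))  # False True
```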

4. Privacy-preserving AI, including federated learning

- Techniques: Researchers are advancing techniques like “federated learning,” which allows a model to be trained across multiple decentralized devices without exchanging the raw data, thereby preserving privacy.

- LLM privacy: A key area of concern is Large Language Models (LLMs), with research focusing on preventing the leakage of sensitive data used in their training.
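
Federated learning’s core aggregation step, federated averaging (FedAvg), is short enough to sketch. Each client trains locally and sends only its model weights plus its example count; the server averages them, weighting by data size. The weight vectors below are hypothetical and stand in for full model parameters.

```python
def federated_average(client_updates):
    """FedAvg aggregation: average client weights, weighted by local dataset size.

    client_updates: list of (weights, num_examples). Only these parameters
    reach the server; raw training data stays on each client.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]

# Two hypothetical clients: one with 100 local examples, one with 300.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```

Shared weights can still leak information about the underlying training data, which is why federated learning is often paired with secure aggregation or differential privacy.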

5. AI for security orchestration, automation, and response (SOAR)

- Real-time response: Research explores autonomous response capabilities that allow AI systems to automatically quarantine infected endpoints or block malicious traffic the moment a threat is detected.

- Streamlining operations: Projects develop AI-driven workflows for threat detection, vulnerability management, and other functions to boost efficiency.
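
An automated response workflow can be sketched as a mapping from alert type to an ordered playbook, with a confidence gate that keeps uncertain cases in human hands. The alert fields, playbook names, and action strings are hypothetical placeholders, not the API of any SOAR platform.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    kind: str          # detection category assigned upstream
    host: str
    source_ip: str
    confidence: float  # detector confidence, 0-1

# Hypothetical playbooks: alert kind -> ordered response actions.
# Action names are placeholders, not calls into any real SOAR product.
PLAYBOOKS = {
    "ransomware": ["isolate_host", "snapshot_disk", "open_ticket"],
    "brute_force": ["block_ip", "force_password_reset", "open_ticket"],
}

def respond(alert: Alert, auto_threshold: float = 0.9) -> list:
    """Pick response actions, keeping a human in the loop for uncertain alerts."""
    if alert.confidence < auto_threshold:
        return ["escalate_to_analyst"]
    # Unknown alert kinds fall back to opening a ticket.
    return PLAYBOOKS.get(alert.kind, ["open_ticket"])

alert = Alert("brute_force", "web-01", "203.0.113.7", 0.97)
print(respond(alert))  # ['block_ip', 'force_password_reset', 'open_ticket']
```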

6. AI-powered phishing and social engineering defense

- NLP for phishing detection: Researchers use Natural Language Processing (NLP) to analyze the tone, content, and structure of emails to detect sophisticated phishing attempts that traditional filters miss.

- Deepfake detection: Projects focus on developing tools to identify and flag AI-generated deepfake audio and video, especially in scenarios involving financial transfers or sensitive communications.
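
Production phishing detectors use far richer NLP models, but a multinomial naive Bayes classifier shows the basic idea of learning which wording correlates with phishing. The four-message training set is hypothetical and far too small for real use; a real system would also weigh headers, URLs, and sender reputation.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.word_counts = {label: Counter() for label in set(labels)}
        self.doc_counts = Counter(labels)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, doc):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior plus smoothed log likelihood of each token.
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(doc):
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

docs = [
    "urgent verify your account password now",
    "your account is suspended click here to verify",
    "meeting notes attached for tomorrow",
    "lunch schedule for the team this week",
]
labels = ["phishing", "phishing", "legit", "legit"]
model = NaiveBayes().fit(docs, labels)
print(model.predict("please verify your password urgently"))  # phishing
print(model.predict("notes for the team meeting"))            # legit
```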

7. Quantum-resistant cryptography and AI

- AI for cryptographic design: Research uses AI to analyze how quantum computers might attack existing systems and assist in the design of new, more secure cryptographic methods.

- Post-quantum standards: This area is critical because technology companies such as IBM, along with government agencies, predict the need to prepare for post-quantum cryptography standards by 2025.

8. AI for IoT and cyber-physical systems security

- Anomaly detection: AI systems monitor network traffic and behavior patterns in industrial control systems to detect anomalies that may indicate a targeted attack.

- Proactive measures: Research explores proactive methods to mitigate cybersecurity challenges in IoT-based networks, such as those used in smart healthcare.
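
Anomaly detection on industrial telemetry can be sketched with an exponentially weighted moving average (EWMA) baseline. The readings, `alpha`, and `tolerance` are hypothetical values chosen for illustration; a deployed system would tune them per sensor or learn a model of normal behavior instead.

```python
def ewma_anomalies(readings, alpha=0.3, tolerance=5.0):
    """Return indices of readings that deviate from a running EWMA baseline.

    alpha controls how quickly the baseline follows new data. Flagged
    readings are not folded into the baseline, so one spike cannot drag it upward.
    """
    flagged = []
    baseline = readings[0]
    for i, value in enumerate(readings[1:], start=1):
        if abs(value - baseline) > tolerance:
            flagged.append(i)
            continue
        baseline = alpha * value + (1 - alpha) * baseline
    return flagged

# Hypothetical pump-pressure readings (psi) from an industrial controller.
pressure = [50.1, 50.3, 49.8, 50.0, 50.2, 71.5, 50.1]
print(ewma_anomalies(pressure))  # [5]
```

Skipping flagged readings keeps a single spike from corrupting the baseline, but it also means a legitimate, lasting change (say, a new setpoint) keeps being flagged until the baseline is reset.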

9. AI for threat intelligence and predictive analytics

- Predictive defense: AI-driven predictive analytics enable organizations to move from a reactive security posture to a more proactive one, strengthening defenses before an attack occurs.

- Threat prioritization: Projects focus on using AI to prioritize threats based on potential impact and exploitability, helping security teams focus their resources effectively.

10. AI for vulnerability management

- Automated scanning: AI automates vulnerability scanning and assessment, especially in complex cloud and multi-cloud environments.

- Intelligent prioritization: By analyzing risk factors, AI can help organizations prioritize which vulnerabilities to fix first, focusing on the most critical issues with the highest potential impact.
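
Risk-based prioritization can be sketched by combining severity with the likelihood of exploitation and the importance of the affected asset. The weighting below is a hypothetical heuristic, and the CVE labels are placeholders rather than real identifiers; real tools might draw exploit probability from a source such as FIRST’s Exploit Prediction Scoring System (EPSS).

```python
from dataclasses import dataclass

@dataclass
class Finding:
    cve_id: str            # placeholder label, not a real CVE
    cvss: float            # base severity, 0-10
    exploit_prob: float    # estimated probability of exploitation, 0-1
    asset_critical: bool   # e.g., internet-facing or holds sensitive data

def risk_score(f: Finding) -> float:
    # Hypothetical weighting: severity x likelihood, doubled for critical assets.
    return f.cvss * f.exploit_prob * (2.0 if f.asset_critical else 1.0)

findings = [
    Finding("CVE-A", 9.8, 0.02, False),
    Finding("CVE-B", 7.5, 0.60, True),
    Finding("CVE-C", 5.3, 0.40, False),
]
for f in sorted(findings, key=risk_score, reverse=True):
    print(f.cve_id, round(risk_score(f), 2))
```

Here the highest-CVSS finding ranks last because its estimated exploit probability is so low, which is the kind of reordering intelligent prioritization aims for.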

Innovations

Drawing on recent innovations, research, and industry trends, here are ten of the most significant innovations involving AI and cybersecurity according to Gemini AI Deep Dive:

1. Generative AI (GenAI) for enhanced social engineering

- For attackers: Threat actors use large language models (LLMs) to craft personalized and grammatically flawless phishing emails, text messages, and voice calls (vishing) that are designed to bypass traditional filters and human scrutiny.

- For defenders: AI-powered security solutions use machine learning and natural language processing (NLP) to detect sophisticated social engineering attempts by analyzing metadata, sender behavior, and linguistic cues that deviate from established norms.

2. AI-powered malware and adaptive defense

- For attackers: AI-driven malware can use polymorphic techniques to constantly alter its code, evading signature-based detection. It can also mimic legitimate software to hide its malicious activity and adapt its behavior based on the environment it finds itself in.

- For defenders: AI-powered behavioral analysis and sandboxing observe how files and processes act in real-time. This can reveal malicious intent even when the malware’s code is new or disguised.

3. Predictive threat intelligence

- AI systems analyze massive datasets—including threat feeds, network telemetry, and dark web activity—to forecast likely attack vectors and actor tactics, techniques, and procedures (TTPs).

- For instance, predictive AI can issue a targeted warning to a financial institution about a specific phishing campaign based on patterns observed in another region.

4. Autonomous security operations

- Agentic AI for SOAR: In Security Orchestration, Automation, and Response (SOAR) platforms, AI agents can fully automate incident triage, alert enrichment, and response execution by mimicking human workflows and decision-making.

- Robotics for physical security: AI-powered autonomous patrol units, like the Knightscope K5, use cameras, AI analytics, and sensors to patrol large areas, detect anomalies, and alert human teams.

5. Explainable AI (XAI) for threat analysis

- By making a model’s reasoning transparent, for example by showing which inputs led it to flag an event, XAI helps security teams validate AI alerts, debug models, and comply with regulations that require accountability for AI-driven decisions.

- XAI is used in areas like threat detection, incident response, and vulnerability assessment, where understanding the AI’s logic is critical for effective action.
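
For a linear model, an explanation can be exact: the alert score is a bias plus a sum of weight-times-value terms, so each term is that feature’s contribution. The sketch below ranks those contributions; the feature names and weights are hypothetical. For nonlinear models, tools such as SHAP or LIME approximate similar per-feature attributions.

```python
def explain_linear_alert(weights, bias, features):
    """Break a linear model's alert score into per-feature contributions.

    The score is bias + sum(weight * value), so each product is exactly
    that feature's share of the score. Returns (score, ranked contributions).
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical login-alert model.
weights = {"failed_logins": 0.5, "new_country": 2.0, "off_hours": 0.8}
features = {"failed_logins": 6, "new_country": 1, "off_hours": 0}
score, ranked = explain_linear_alert(weights, -1.5, features)
print(score)   # 3.5
print(ranked)  # [('failed_logins', 3.0), ('new_country', 2.0), ('off_hours', 0.0)]
```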

6. AI-driven network traffic analysis

- Using machine learning and neural networks, AI can learn what constitutes normal network activity, enabling it to detect emerging or zero-day threats by flagging subtle deviations.

- These systems can perform real-time analysis, enabling faster detection and response times crucial for mitigating threats like Distributed Denial of Service (DDoS) attacks.

7. Adaptive authentication and zero trust

- Risk-based authentication: AI assesses user and device behavior in real time, adjusting authentication requirements based on the assessed risk level. For example, a login attempt from an unusual location might trigger multi-factor authentication (MFA).

- Zero trust implementation: AI helps enforce zero-trust principles by continuously verifying every access request, identifying anomalous behavior, and ensuring least-privilege access is maintained.
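
Risk-based authentication can be sketched as a score that maps to allow, step-up MFA, or deny. The signals, point values, and cutoffs below are hypothetical and hand-set for readability; an AI-driven system would learn them from data. Under zero trust, a check like this would run on every access request, not just at initial login.

```python
def login_risk(known_device, usual_country, impossible_travel, failed_attempts):
    """Hypothetical additive risk score for a login attempt, capped at 100."""
    risk = 0
    if not known_device:
        risk += 30
    if not usual_country:
        risk += 30
    if impossible_travel:
        risk += 40
    risk += min(failed_attempts, 5) * 4  # recent failures add up to 20 points
    return min(risk, 100)

def auth_requirement(risk):
    # Hypothetical cutoffs mapping risk to an authentication decision.
    if risk >= 70:
        return "deny"
    if risk >= 30:
        return "require_mfa"
    return "allow"

print(auth_requirement(login_risk(True, True, False, 0)))   # allow
print(auth_requirement(login_risk(True, False, False, 1)))  # require_mfa (risk 34)
print(auth_requirement(login_risk(False, False, True, 3)))  # deny
```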

8. LLMs for threat hunting and incident response

- LLMs can accelerate threat hunting by automatically analyzing logs, incident reports, and threat intelligence to identify attack patterns and map them to frameworks like MITRE ATT&CK.

- They can also help generate incident summary reports and suggest remediation steps, freeing human analysts to focus on more complex tasks.
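
The output an LLM-assisted hunt aims for, log lines tagged with MITRE ATT&CK techniques, can be sketched with simple pattern rules. The rules are a hypothetical stand-in for the LLM’s judgment and the log lines are invented; the technique IDs (T1059.001 PowerShell, T1110 Brute Force, T1003 OS Credential Dumping) are real ATT&CK identifiers.

```python
import re

# Hypothetical keyword rules standing in for an LLM's mapping step.
RULES = [
    (re.compile(r"powershell.*-enc", re.I), "T1059.001", "PowerShell"),
    (re.compile(r"failed password", re.I), "T1110", "Brute Force"),
    (re.compile(r"mimikatz|lsass", re.I), "T1003", "OS Credential Dumping"),
]

def map_to_attack(log_lines):
    """Return (technique_id, technique_name, line) for every rule that matches."""
    hits = []
    for line in log_lines:
        for pattern, tid, name in RULES:
            if pattern.search(line):
                hits.append((tid, name, line))
    return hits

logs = [
    "sshd[811]: Failed password for root from 203.0.113.9 port 52113",
    "proc=powershell.exe args='-nop -enc SQBFAFgA'",
    "user alice logged in",
]
for tid, name, _ in map_to_attack(logs):
    print(tid, name)  # T1110 Brute Force, then T1059.001 PowerShell
```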

9. Adversarial AI for attack and defense

- Attacks: Adversarial techniques can manipulate a model’s input data—for example, by slightly altering a file to make a malware detection model misclassify it as benign.

- Defenses: Defenders use techniques like adversarial training, where models are exposed to deceptive data during training, to make them more robust and resilient against manipulation.

10. AI-powered software supply chain security

- AI Security Posture Management (AI-SPM) solutions continuously monitor developer activity, AI models, and code repositories to flag risks like exposed endpoints or prompt injection vulnerabilities.

- These platforms use AI to scan for vulnerabilities, enforce security policies, and automate remediation actions throughout the development lifecycle.

Projects

Some of the most innovative projects in AI and cybersecurity focus on creating advanced defenses that learn and adapt in real-time, often using generative AI to stay ahead of malicious actors. These projects range from autonomous malware analysis to developing defenses against AI-powered disinformation.

1. Autonomous malware and threat analysis

- Microsoft Project Ire: An AI agent that uses large language models (LLMs) and reverse engineering tools to autonomously investigate and classify malware.

- Deep Instinct’s DIANNA: A generative AI-powered tool that acts as a virtual team of malware analysts to provide real-time, in-depth analysis of threats.

2. Deepfake and social engineering defense

- Deepfake detection frameworks: Researchers at Virginia Commonwealth University and Old Dominion University are developing an “Uncertainty-Aware Deepfake Detection Framework” to address the growing challenge of detecting sophisticated deepfakes.

- AI for social engineering awareness: Projects use AI to create personalized, AI-generated training scenarios that help employees recognize and defend against social engineering attacks, such as deepfake impersonations of senior executives.

3. AI-native threat intelligence

- AI-native platforms: Companies like SOC Prime are building ecosystems with AI co-pilots trained on massive, proprietary datasets to analyze new threat vectors and automate threat-hunting operations.

4. Advanced fraud detection

- Multi-modal fraud analysis: Systems developed by companies like Feedzai use machine learning to monitor real-time transactions, while others, such as BioCatch, analyze user behavioral patterns (typing speed, mouse movements) to identify and flag anomalies indicative of fraudulent activity.

- AI-enhanced document authentication: Projects are using AI to analyze documents for signs of forgery, helping financial institutions combat identity and document fraud during client onboarding.
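
Behavioral signals such as typing speed can be sketched as a comparison between a user’s enrolled inter-key intervals and a new attempt. The intervals and threshold below are hypothetical, and the distance is a simple mean absolute difference, not the models that commercial products such as those named above use.

```python
from statistics import mean

def keystroke_distance(profile, sample):
    """Mean absolute difference (ms) between enrolled and observed inter-key intervals."""
    if len(profile) != len(sample):
        raise ValueError("interval vectors must have the same length")
    return mean(abs(p - s) for p, s in zip(profile, sample))

# Hypothetical enrolled intervals for typing a fixed passphrase, in milliseconds.
enrolled = [120, 95, 140, 110, 130]
genuine = [125, 90, 150, 105, 128]
bot_like = [40, 40, 40, 40, 40]

THRESHOLD_MS = 25  # hypothetical cutoff; would need tuning per user
for attempt in (genuine, bot_like):
    print(keystroke_distance(enrolled, attempt) > THRESHOLD_MS)  # False, then True
```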

5. Autonomous security orchestration, automation, and response (SOAR)

- Context-aware response: Platforms like Palo Alto Networks Cortex XSOAR and Swimlane Turbine use AI to automate incident response workflows, helping understaffed Security Operations Center (SOC) teams respond faster and more accurately to complex threats.

6. Quantum-resistant cryptography

- AI-assisted cryptographic design: These projects explore how AI can help create new, more secure cryptographic methods by analyzing patterns in quantum computing capabilities.

7. Zero Trust for AI

- Adversarial AI research: Projects focus on developing attack and defense mechanisms for decentralized learning frameworks to prevent system manipulation.

- Secure AI pipelines: Research is being done on securing the entire AI lifecycle, from data collection to model training and deployment, using AI-driven monitoring and controls.

8. AI-powered intrusion detection and response systems (IDRS)

- Knowledge-enhanced threat detection: Researchers are using LLMs to build knowledge-guided models that detect anomalies in a timely way, even when training data is scarce.

- Privacy-preserving IDRS: Projects combine technologies like federated learning and graph neural networks to develop scalable, privacy-centric intrusion detection systems.

9. Vulnerability management with AI

- Automated vulnerability assessment: AI-driven tools perform continuous security scans and automate reporting, improving the efficiency of patching and remediation efforts.

- Vulnerability detector with conversational assistance: Projects such as one at William & Mary and George Mason University are developing LLM-based vulnerability detectors that not only find and explain software flaws but also suggest fixes.

10. Generative AI for security policy creation

- Custom security policies: Generative AI tools can analyze an organization’s environment and security requirements to generate optimized policies, reducing human effort and error.